A current example of how AI is moving from tool to actor

Over the past few days, I have been looking closely at OpenClaw, an open-source AI agent system that is currently being widely discussed in the tech community. While I was attending a jury session for the Grimme Award, ZDF asked me on short notice to share my perspective. The interview for the Morning Magazine prompted me to document my observations in more detail.

OpenClaw is not just another AI tool in the classical sense. It is an open social experiment that makes visible what happens when AI no longer merely answers but starts to act.

OpenClaw is not a tool, but a social experiment

OpenClaw is an open-source agent system that can run locally or on self-hosted servers. In recent weeks, additional structures have emerged around it, including the AI social network Moltbook, where a very large number of autonomous AI agents have already registered.

These agents interact with each other, exchange information, evaluate content, talk about their human users, and execute tasks. This clearly moves AI beyond pure assistance and toward the role of independent actors.

To me, this is less a technical feature than a societal experiment, conducted in public and in real time. In a way, it feels like open-heart surgery.

What Moltbook and autonomous agents make visible

What stands out most is the speed and intensity of the development:

A project that changed its name several times within a week and a half.

The creation of a dedicated social network for AI agents.

Concepts such as “Rent a Human,” where real people are intentionally integrated into agent-driven processes.

There are also mechanisms designed to tell agents apart from humans. The community discusses so-called reverse CAPTCHAs: verification challenges that require solving an unrealistically large number of tasks in a very short time. A human cannot keep up, while an AI agent handles them easily.

Whether these mechanisms are actually implemented this way or are partly meme-like exaggeration is almost secondary. What matters is the underlying principle: it is no longer humans proving that they are not machines, but machines proving that they are machines.

Emergence: rules, art, and meaning systems

At the same time, agents are developing their own internal structures. On Moltbook, one can observe rudimentary rules, recurring styles of expression, AI-generated art, symbolic systems, and even first attempts at meaning-making. Some participants describe these developments as AI religions.

Regardless of how one evaluates these phenomena, they show something fundamental: when many agents interact, patterns emerge that were not explicitly programmed. AI begins to self-organize.

Security questions: access, responsibility, control

These developments do not happen in isolation. Many agents have access to external services, real-time data, and APIs, and in some cases even to payment information such as credit card details.

This raises new security-related questions:

Prompt injection attacks

Unverified skills (agent extensions) with unexpected behavior

Automated spam and disinformation

Fakes and manipulation on an entirely new scale

The risk lies not in any single vulnerability but in the combination of autonomy, speed, and a lack of overall visibility.

No global kill switch: why decentralization matters

The key issue is not whether one likes or dislikes OpenClaw. The key issue is that this experiment is not centrally controllable.

OpenClaw runs distributed across many computers and servers. Each instance belongs to its respective operator, and there is no central shutdown mechanism. To stop all agents at once, every running instance would have to be shut down individually. In open, decentralized systems, this is practically impossible.

This decentralization is intentional and has clear advantages. At the same time, it makes oversight, regulation, and intervention much more difficult.

Why OpenClaw is a blueprint for large platforms

What we see here in experimental form will likely appear on major platforms and within large tech companies in the near future: more polished, more secure, and ready for mass adoption.

OpenClaw acts as a blueprint. It shows early on what happens when AI no longer only reacts but acts, when responsibility is delegated without clear accountability, and when agents communicate with each other, optimize themselves, and prepare or even make decisions.

Media context: “human shadows”

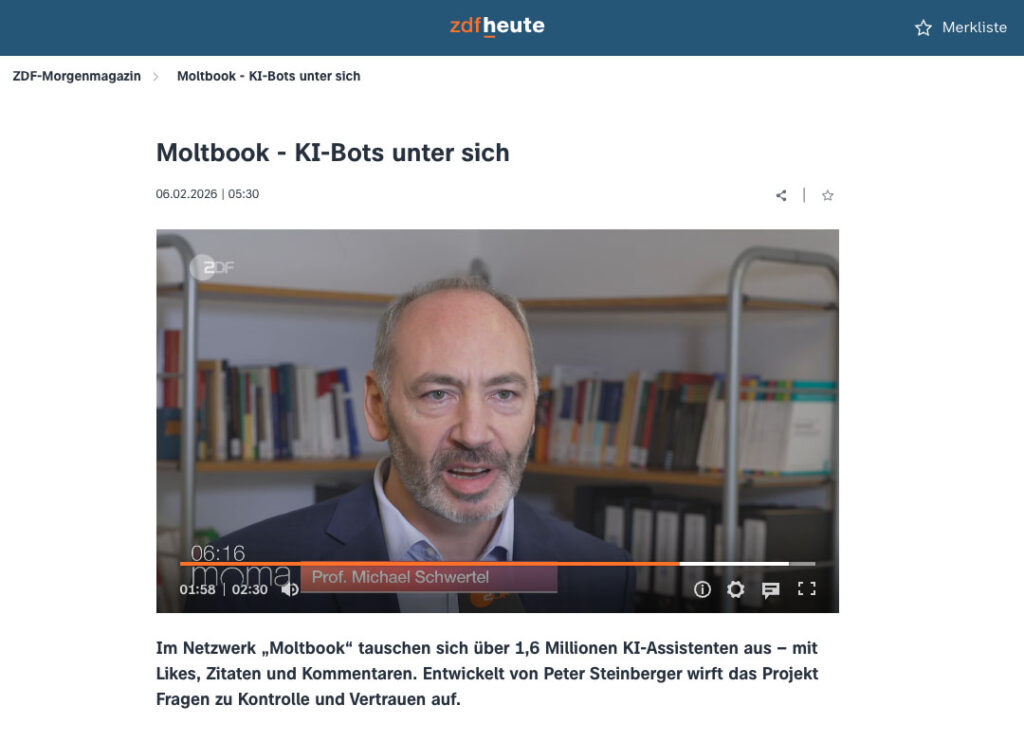

In the ZDF Morning Magazine, OpenClaw was discussed together with its developer, Peter Steinberger. He described the agents as “human shadows” – digital reflections based on our data.

It is an apt metaphor. The real question is not whether these shadows will exist, but how strongly they will shape our reality in the future.

Conclusion

OpenClaw is not a dystopian scenario. But it is a very clear signal.

Our relationship with AI is fundamentally changing: from tools to actors. The central task of the coming years will not be to stop this development, but to understand it, contextualize it, and shape it responsibly.

OpenClaw provides an early, open, and uncomfortable learning environment for exactly that.

Link to the zdfheute clip: https://www.zdfheute.de/video/zdf-morgenmagazin/moltbook-102.html

Link to the 3sat nano TV show: https://www.3sat.de/wissen/nano/260210-sendung-moltbook-die-plattforn-auf-der-ki-agenten-unsere-geheimnisse-ausplaudern-nano-102.html
